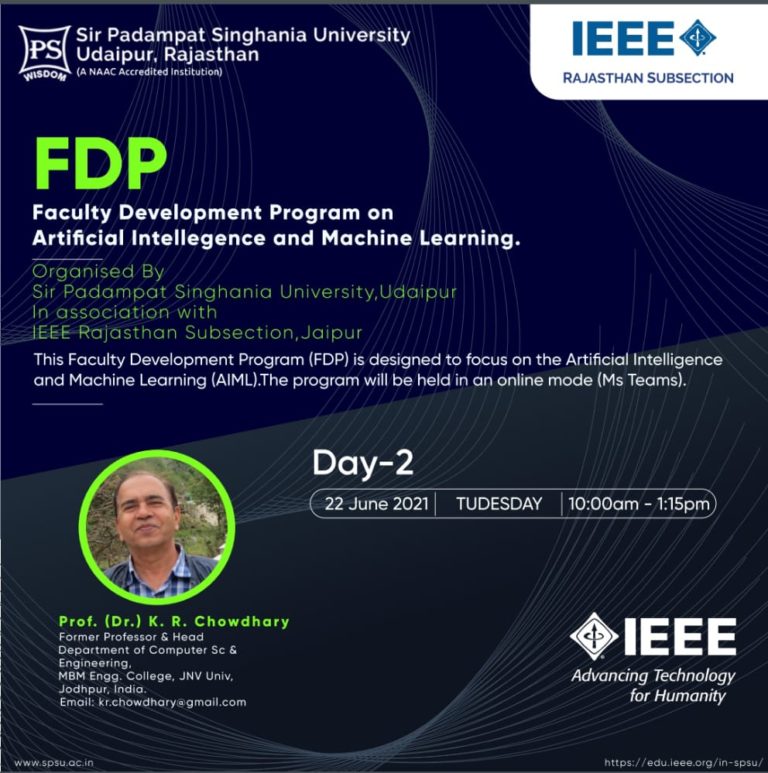

FACULTY DEVELOPMENT PROGRAM (FDP)

FDP DAY TWO

22nd June 2021, 10:00am to 11:30am

Topic: Machine Learning Technique and Algorithm

Speaker: Dr. K. R. Chowdhary (Prof. & HOD, CS Dept., MBM)

SUMMARY

Dr. K. R. Chowdhary explained the following:

A machine learns with respect to a particular task T, performance metric P, and type of experience E if the system improves its performance P at task T following experience E.

There are five tribes of machine learning algorithms: Symbolists, Connectionists, Evolutionaries, Bayesians, and Analogizers. The basic learning categories are supervised, unsupervised, and reinforcement learning.

Reinforcement learning (RL): Human beings learn through interactions with the environment or a teacher, and these interactions are cause-and-effect relations. RL has four components: a policy, a value mapping, a reward function, and a model of the environment.

The decision-tree algorithm builds a decision tree from training data. Examples covered: a decision tree for financing a client and a decision tree for word sense disambiguation (WSD). The training database is accessed extensively while the tree is constructed; if it does not fit in memory, an efficient data-access method is needed to achieve scalability.

Hebb's rule is the cornerstone of connectionism: knowledge is stored in the connections between neurons, and neurons that fire together wire together. Connectionist representations are distributed: each concept is represented by many neurons.

The session also covered: learning to cure cancer (Symbolists), the Symbolists' principle of inverse deduction, classical deduction vs. inverse deduction, and decision trees to cut computation (Symbolists).
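Hebb's rule ("neurons that fire together wire together") can be illustrated with a minimal sketch. The update form `w += eta * x * y`, the learning rate `eta`, and the three-input, single-output setup below are illustrative assumptions and are not taken from the session material.

```python
# Hebbian update sketch: each weight grows in proportion to the product of
# pre-synaptic activity x[i] and post-synaptic activity y.
# The learning rate eta and the toy inputs are assumptions for illustration.

def hebbian_update(weights, inputs, output, eta=0.1):
    """Return the weights after one Hebbian step: w_i + eta * x_i * y."""
    return [w + eta * x * output for w, x in zip(weights, inputs)]


weights = [0.0, 0.0, 0.0]
inputs = [1, 0, 1]   # input neurons 0 and 2 fire, neuron 1 stays silent
output = 1           # the output neuron fires at the same time

# Repeated co-activation strengthens only the active connections.
for _ in range(5):
    weights = hebbian_update(weights, inputs, output)

print(weights)
```

Only the connections from the co-active inputs are strengthened; the weight from the silent neuron stays at zero, which is the sense in which the knowledge sits in the connections.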

SESSION 2

22nd June 2021, 11:45am to 1:15pm

Topic: Unsupervised Machine Learning Technique

Speaker: Dr. K. R. Chowdhary (Prof. & HOD, CS Dept., MBM)

SUMMARY

Dr. K. R. Chowdhary explained the following:

Traditional machine learning algorithms: genetic algorithm (GA), evolutionary algorithms, Bayesian learning, Bayes' theorem, analogy-based learning, and incremental algorithms.

A genetic algorithm (GA) works through three basic operations on a population: selection of the fittest members, crossover, and mutation.

Bayesian learning and the Bayes algorithm were presented as a path of best learning. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayes' theorem works with probabilities: as the evidence increases, we move closer to the solution.

Analogizers map new situations onto known ones; examples are SVM and KNN. Big data categories: nature and classification.

Incremental algorithms are useful when the pattern set to be used is large: because there are no iterations, the execution time is reduced.

Association rules are a set of significant correlations, frequent patterns, associations, or causal structures found in data sets from several types of databases. Association rule mining has been applied efficiently to many domains, such as market basket analysis, astrophysics, crime prevention, fluid dynamics, and counter-terrorism. Three measures are commonly used to show the strength of an association: support, confidence, and lift.
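The three association measures named above can be sketched on a toy market-basket example. The transactions and the rule "bread implies milk" are made-up illustrations, and the function names are hypothetical; the definitions used are the standard ones (support as the fraction of transactions containing an itemset, confidence as support of both sides divided by support of the antecedent, lift as confidence divided by support of the consequent).

```python
# Support, confidence and lift for a rule "antecedent -> consequent".
# The transactions below are invented purely for illustration.

def support(transactions, itemset):
    """Fraction of transactions that contain every item in itemset."""
    itemset = set(itemset)
    return sum(itemset <= t for t in transactions) / len(transactions)


def confidence(transactions, antecedent, consequent):
    """Estimated P(consequent | antecedent)."""
    both = set(antecedent) | set(consequent)
    return support(transactions, both) / support(transactions, antecedent)


def lift(transactions, antecedent, consequent):
    """Confidence relative to the consequent's baseline frequency.
    Above 1: positive association; below 1: negative; about 1: independent."""
    return (confidence(transactions, antecedent, consequent)
            / support(transactions, consequent))


transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
    {"bread", "milk", "eggs"},
]

rule_support = support(transactions, {"bread", "milk"})
rule_confidence = confidence(transactions, {"bread"}, {"milk"})
rule_lift = lift(transactions, {"bread"}, {"milk"})

print(f"support={rule_support:.3f} "
      f"confidence={rule_confidence:.3f} lift={rule_lift:.3f}")
```

In this toy data the lift comes out slightly below 1, so buying bread does not make milk more likely than its baseline rate, even though the confidence looks high on its own. That is why lift is usually reported alongside the other two measures.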

SESSION 3

22nd June 2021, 2:30pm to 4:30pm

Topic: Practical Session on Machine Learning

Speaker: Mrs. Jalpa Mehta (Research Scholar, Sir Padampat Singhania University)

SUMMARY

Mrs. Jalpa Mehta explained the following:

Clustering is the process of dividing a dataset into groups of similar data points. Clustering applications include customer segmentation and fraud detection. Clustering methods: partitioning, hierarchical, density-based, grid-based, and model-based.

K-means clustering is an unsupervised machine learning algorithm: it groups data without first being trained on labelled data. The K-means algorithm has four steps: initialization, assignment, updating, and repetition.

Logistic regression is a predictive analysis algorithm based on the concept of probability. It transforms its output using the logistic sigmoid function to return a probability value.

Examples: email spam or not spam, online transaction fraud or not fraud, tumour malignant or benign.
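How the logistic sigmoid turns a linear score into a probability can be sketched as follows. The weights, bias, feature values, and the 0.5 decision threshold are hypothetical values chosen for illustration; in practice the weights would be learned from training data.

```python
import math


def sigmoid(z):
    """Logistic sigmoid 1 / (1 + e^(-z)), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(features, weights, bias):
    """Probability of the positive class for one example."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


# Hypothetical, hand-picked parameters (not learned from any dataset).
weights = [1.5, -0.5]
bias = -1.0

p = predict_proba([2.0, 1.0], weights, bias)   # linear score z = 1.5
label = "spam" if p >= 0.5 else "not spam"     # assumed 0.5 threshold

print(f"p={p:.4f} -> {label}")
```

The sigmoid maps any real-valued score into the interval (0, 1), so the output can be read as a probability and thresholded to give a yes/no decision such as spam or not spam.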

Problem-1: K-means implementation from scratch using the Iris dataset

Problem-2: K-means implementation using the built-in sklearn model

Problem-3: Logistic regression on the Titanic dataset
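The four K-means steps named in the summary (initialization, assignment, updating, repetition) can be sketched in plain Python. This is not the code used in the session, which is not part of this report: it runs on a small made-up 2-D dataset rather than Iris, and the function names, the random seed, and the stopping rule (stop when no assignment changes) are assumptions.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean_point(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def k_means(points, k, max_iters=100, seed=0):
    rng = random.Random(seed)
    # 1. Initialization: pick k distinct data points as starting centroids.
    centroids = rng.sample(points, k)
    assignments = None
    for _ in range(max_iters):
        # 2. Assignment: each point joins its nearest centroid.
        new_assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # 4. Repetition: stop once the assignments no longer change.
        if new_assignments == assignments:
            break
        assignments = new_assignments
        # 3. Updating: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = mean_point(members)
    return centroids, assignments


# Made-up data: two loose groups of 2-D points.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1),
          (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
centroids, assignments = k_means(points, k=2)

print(centroids)
print(assignments)
```

The result depends on the initial centroids, so different seeds can give different cluster numbering and, on harder data, different clusterings. Library implementations typically rerun the algorithm from several starting points for this reason.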